These AI failures aren’t necessarily the fault of their creators.

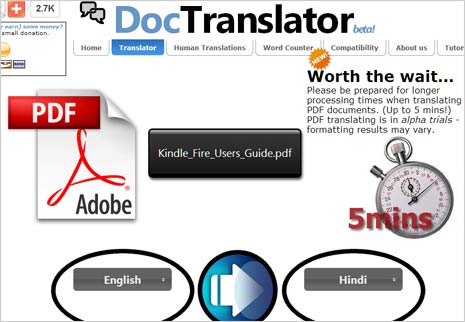

Google online translator generator#

Meanwhile, the AI language generator GPT-3 – which wrote an entire article for the Guardian in 2020 – recently showed that it was also shockingly good at producing harmful content and misinformation. Google Translate has been accused of stereotyping based on gender, such as its translations presupposing that all doctors are male and all nurses are female. To the dismay of their creators, AI algorithms often develop racist or sexist traits. Our method could be used in other fields of AI to help the technology reject, rather than replicate, biases within society. Now, our team has found a way to retrain the AI behind translation tools, using targeted training to help it to avoid gender stereotyping. But when left unchallenged, biases can emerge in the form of concrete negative attitudes towards others. Overwhelmingly, the tools conform to the stereotype, opting for the feminine word in German.īiases are human: they’re part of who we are. When translating from English to German, translation tools have to decide which gender to assign English words like “cleaner”. Such tools are especially vulnerable to gender stereotyping, because some languages (such as English) don’t tend to gender nouns, while others (such as German) do.

But the artificial intelligence (AI) behind them is far from perfect, often replicating rather than rejecting the biases that exist within a language or a society. Online translation tools have helped us learn new languages, communicate across linguistic borders, and view foreign websites in our native tongue.
